The cameras we know shoot images, and the final JPEG or RAW file is there for our visual delight.

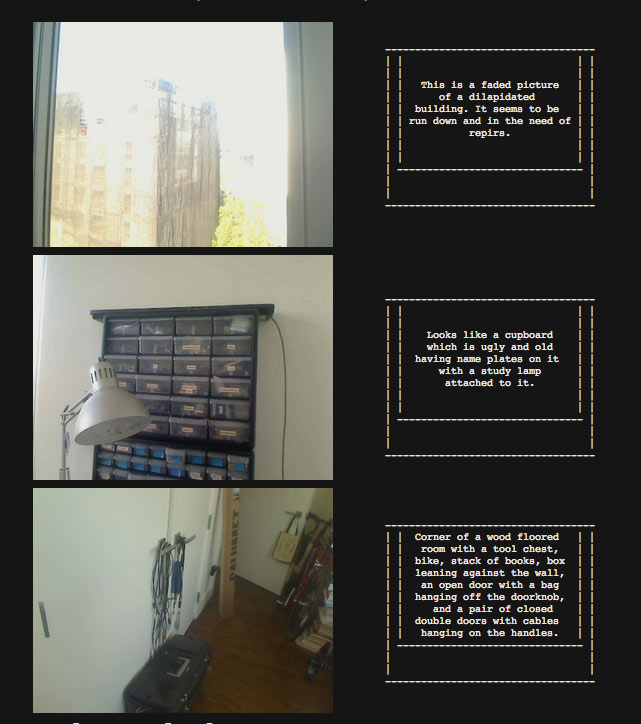

How about a camera that shoots a scene and outputs a metadata description of what it sees? For example, shoot a room full of people, and it tells you in detail about the kind of audience in the room. The camera is called the Descriptive Camera, and it does exactly what its name says.

It’s like Google Goggles visual search in a way: point your smartphone camera at something and it tells you what you’re looking at. But the Descriptive Camera goes further: it generates a textual description of the scene, relying on human input for higher accuracy.

The Descriptive Camera works a lot like a regular camera: point it at a subject and press the shutter button to capture the scene. However, instead of producing an image, this prototype uses crowdsourcing to output a text description of the scene. Modern digital cameras capture gobs of “parsable” metadata about photos, such as the camera’s settings, the location of the photo, and the date and time, but they don’t output any information about the content of the photo. The Descriptive Camera outputs only the metadata about the content.

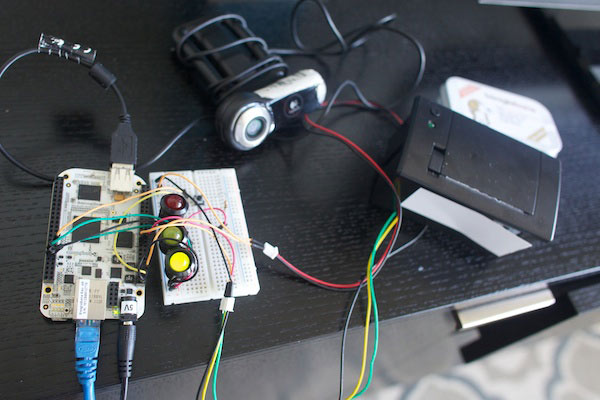

Descriptive Camera in action

Another application of this camera would be generating metadata for all your existing photos. Most of us have a pile of digital photos; combined with this open source project, you would be able to search them by description, e.g. search for photos with a bridge in them. Information about who is in each photo, what they’re doing, and their environment could become incredibly useful for searching, filtering, and cross-referencing our photo collections. This isn’t a totally new invention: a similar search works in the Google Drive app on iOS and Android. However, this is the first open project.
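To make the search idea concrete, here is a minimal sketch of keyword search over photo descriptions. The `Photo` type, the `search_photos` function, and the sample descriptions are all made up for illustration; they are not part of the Descriptive Camera project.

```python
from dataclasses import dataclass


@dataclass
class Photo:
    filename: str
    description: str  # free text, e.g. as written by a human describer


def search_photos(photos, query):
    """Return photos whose description contains every word of the query.

    Matching is a case-insensitive substring test, so "bridge" also
    matches "bridges". A real photo library would want a proper index.
    """
    words = query.lower().split()
    return [
        p for p in photos
        if all(w in p.description.lower() for w in words)
    ]


# Hypothetical sample library.
library = [
    Photo("img_001.jpg", "Two people walking across a suspension bridge at sunset"),
    Photo("img_002.jpg", "A crowded room with an audience watching a speaker"),
]

print([p.filename for p in search_photos(library, "Bridge")])
```

With these two sample entries, only `img_001.jpg` matches the query "Bridge".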

Of course, we don’t yet have the technology to make this a practical proposition, but the Descriptive Camera uses crowdsourcing to explore the possibilities.

Technology Behind Descriptive Camera

At its heart, the Descriptive Camera runs on Amazon’s Mechanical Turk API, which lets a developer submit Human Intelligence Tasks (HITs) for workers on the internet to complete. The developer also sets the price they’re willing to pay for the successful completion of each task. For faster and cheaper results, the camera can also be put into “accomplice mode,” where it sends an instant message to another person instead. That IM contains a link to the picture and a form where they can enter a description of the image.
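The two modes can be pictured roughly as follows. This is only a sketch: the project itself talks to Mechanical Turk through command-line utilities, whereas this code just builds the data a task would need and sends nothing. The key names mirror parameters of the Mechanical Turk `CreateHIT` operation; the function names, titles, durations, and URLs are assumptions made for illustration.

```python
def build_hit_params(reward_usd=1.25, lifetime_s=600):
    """Build the basic fields of a 'describe this photo' HIT.

    The question form that would show the photo to the worker is
    omitted here. All values are illustrative, not the project's own.
    """
    if reward_usd <= 0:
        raise ValueError("reward must be positive")
    return {
        "Title": "Describe a photo",
        "Description": "Write a short text description of the photo shown.",
        "Reward": f"{reward_usd:.2f}",       # price offered per completed task
        "MaxAssignments": 1,                 # one description per photo
        "LifetimeInSeconds": lifetime_s,     # how long the task stays listed
        "AssignmentDurationInSeconds": 300,  # time a worker has to finish
    }


def build_accomplice_message(image_url, form_url):
    """Build the instant message used in 'accomplice mode'."""
    return (
        f"Please describe this picture: {image_url}\n"
        f"Enter your description here: {form_url}"
    )


# Hypothetical URLs, for illustration only.
print(build_hit_params())
print(build_accomplice_message("http://example.com/shot.jpg",
                               "http://example.com/describe"))
```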

The Descriptive Camera runs on an embedded Linux platform, the BeagleBone. It has a USB webcam, a thermal printer from Adafruit, a trio of status LEDs, and a shutter button.

The core application that handles capture and processing is written as Python scripts. The mrBBIO module is used for GPIO control (the LEDs and the shutter button), and open-source command-line utilities communicate with Mechanical Turk. The onboard printer is a compact portable dot matrix printer interfaced via USB.
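The overall flow of such a script might look like the sketch below. It is not the project’s actual code: the hardware and network steps are passed in as plain functions (all names here are hypothetical), so the example runs on any machine without a webcam, GPIO pins, or a printer.

```python
def handle_shutter_press(capture, request_description, print_text, set_led):
    """Run one capture cycle: shoot, get a description, print it.

    capture()                  -> path of the captured image
    request_description(path) -> text description of that image
    print_text(text)           -> sends the text to the printer
    set_led(name, on)          -> switches a status LED on or off
    """
    set_led("developing", True)       # signal that a result is pending
    try:
        image_path = capture()
        text = request_description(image_path)
        print_text(text)
        return text
    finally:
        set_led("developing", False)  # always clear the LED, even on error


# Stand-ins for the real hardware, for demonstration only.
events = []
handle_shutter_press(
    capture=lambda: "/tmp/shot.jpg",
    request_description=lambda path: f"description of {path}",
    print_text=events.append,
    set_led=lambda name, on: events.append((name, on)),
)
print(events)
```

On the real device, the stand-ins would be replaced by functions that read the webcam, call the Mechanical Turk utilities, drive the printer, and toggle GPIO pins.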

At the moment, the device has no wireless capability and relies solely on a cheaper alternative: wired Ethernet.

When the shutter button is released, a yellow LED indicates that the results are still “developing”. With a HIT price of $1.25, results are returned within 3-6 minutes.
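The waiting period can be thought of as a polling loop with a timeout. The sketch below assumes a hypothetical `fetch_result` function that returns `None` until a description is available; the polling interval and timeout are illustrative values, not the project’s settings.

```python
import time


def wait_for_description(fetch_result, poll_interval_s=15.0, timeout_s=600.0,
                         sleep=time.sleep, clock=time.monotonic):
    """Poll until a description arrives or the timeout expires.

    Returns the description text, or None if nothing arrived in time.
    sleep and clock can be swapped out, which makes the loop easy to test.
    """
    deadline = clock() + timeout_s
    while True:
        result = fetch_result()
        if result is not None:
            return result
        if clock() >= deadline:
            return None
        sleep(poll_interval_s)


# Demonstration with a fake source that answers on the third check.
answers = iter([None, None, "A desk with a laptop and a coffee mug"])
print(wait_for_description(lambda: next(answers), poll_interval_s=0))
```

On the camera, the yellow “developing” LED would stay lit for as long as this loop is running.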
