AMD has announced the third-generation CPU core for its Bulldozer architecture: Steamroller.

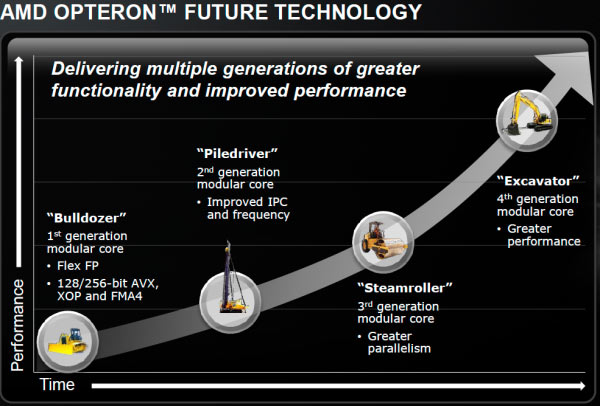

The first Bulldozer processors shipped in 2011, and the current, second-generation core is Piledriver (introduced in 2012), which forms the CPU foundation of AMD’s Trinity APU. By the end of the year we’ll also see a high-end desktop CPU based on Piledriver, without processor graphics.

Piledriver brought power usage down by up to 20% without compromising performance. It also improved scheduling efficiency, prefetching and branch prediction.

Steamroller carries on the same trend as the next ‘tock’, and it also moves to a smaller 28nm process.

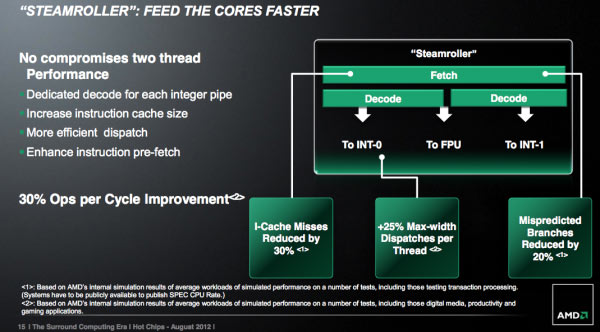

Front End Improvements

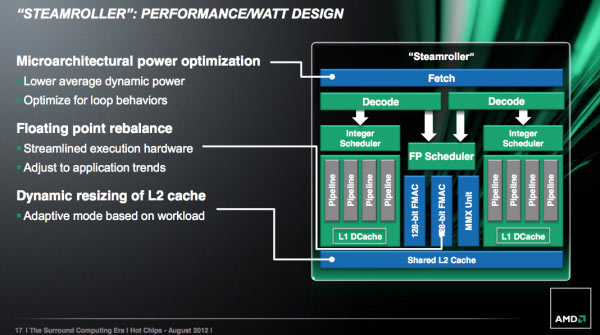

Piledriver (2012) suffered from one major bottleneck: the two cores in each module shared the same fetch and decode hardware. Steamroller addresses this by duplicating the decode hardware in each module. Each core now has its own 4-wide instruction decoder, and the two decoders can operate in parallel instead of alternating every other cycle. This won’t double performance, but the improvement should still be around 1.6x even under worst-case conditions, and it comes at only a minor increase in power consumption.
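
To see why a shared, alternating decoder is a bottleneck, here is a toy cycle model. It is a hypothetical sketch, not AMD data: `decoded_per_core` and `DECODE_WIDTH` are illustrative names, both cores are assumed to always have instructions waiting, and fetch, branch and cache stalls are ignored, so it shows only the idealized peak gap between the two designs.

```python
"""Toy cycle model of decode bandwidth in one two-core module.

Hypothetical illustration only: it ignores fetch, branches, cache misses and
every other real-world stall, so it shows the *peak* difference between a
shared, alternating decoder and one decoder per core.
"""

DECODE_WIDTH = 4  # instructions decoded per decoder per cycle (4-wide)


def decoded_per_core(cycles: int, shared: bool) -> list[int]:
    """Return instructions decoded for core 0 and core 1 over `cycles` cycles.

    Both cores are assumed to always have instructions waiting.
    """
    decoded = [0, 0]
    for cycle in range(cycles):
        if shared:
            # One decoder serves the two cores on alternating cycles.
            decoded[cycle % 2] += DECODE_WIDTH
        else:
            # Each core has its own decoder, so both decode every cycle.
            decoded[0] += DECODE_WIDTH
            decoded[1] += DECODE_WIDTH
    return decoded


if __name__ == "__main__":
    print("shared  :", decoded_per_core(100, shared=True))   # [200, 200]
    print("per-core:", decoded_per_core(100, shared=False))  # [400, 400]
```

In this idealized setting per-core decoders double decode throughput; real code also stalls for other reasons, which is why the expected gain is smaller than 2x.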

Steamroller inherits Piledriver’s perceptron branch predictor, but with a larger buffer and better performance, which makes it better suited to heavy server workloads.
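
For readers unfamiliar with perceptron predictors, below is a minimal sketch of the textbook scheme (Jiménez and Lin): the branch address selects a row of signed weights, the prediction is the sign of their dot product with recent global branch history, and the weights are trained on a misprediction or a low-confidence prediction. Table size, history length and weight limits are illustrative assumptions, not Steamroller’s parameters, and `PerceptronPredictor` and `accuracy` are hypothetical names.

```python
"""Minimal perceptron branch predictor (textbook scheme, not AMD's design).

Assumptions: a small table of perceptrons indexed by branch address, a global
history of HISTORY_LEN outcomes encoded as +1 (taken) / -1 (not taken), and
the training threshold formula from Jimenez and Lin's original paper.
"""

HISTORY_LEN = 8
TABLE_SIZE = 64
THRESHOLD = int(1.93 * HISTORY_LEN + 14)  # training threshold theta
WEIGHT_MAX, WEIGHT_MIN = 127, -128  # weights saturate like 8-bit counters


class PerceptronPredictor:
    def __init__(self) -> None:
        # Each row holds a bias weight plus one weight per history bit.
        self.weights = [[0] * (HISTORY_LEN + 1) for _ in range(TABLE_SIZE)]
        self.history = [1] * HISTORY_LEN  # +1 = taken, -1 = not taken

    def _output(self, pc: int) -> int:
        w = self.weights[pc % TABLE_SIZE]
        return w[0] + sum(wi * hi for wi, hi in zip(w[1:], self.history))

    def predict(self, pc: int) -> bool:
        """Predict taken when the dot product is non-negative."""
        return self._output(pc) >= 0

    def update(self, pc: int, taken: bool) -> None:
        """Train on the real outcome, then shift it into the global history."""
        y = self._output(pc)
        t = 1 if taken else -1
        w = self.weights[pc % TABLE_SIZE]
        # Train only on a misprediction or when confidence is below threshold.
        if (y >= 0) != taken or abs(y) <= THRESHOLD:
            w[0] = max(WEIGHT_MIN, min(WEIGHT_MAX, w[0] + t))
            for i, h in enumerate(self.history, start=1):
                w[i] = max(WEIGHT_MIN, min(WEIGHT_MAX, w[i] + t * h))
        self.history = self.history[1:] + [t]


def accuracy(pattern: list[bool], repeats: int, pc: int = 0x40) -> float:
    """Run a repeating branch pattern and return the fraction predicted correctly."""
    bp = PerceptronPredictor()
    correct = total = 0
    for _ in range(repeats):
        for outcome in pattern:
            correct += bp.predict(pc) == outcome
            bp.update(pc, outcome)
            total += 1
    return correct / total


if __name__ == "__main__":
    # A loop branch taken three times, then not taken, repeated.
    print(accuracy([True, True, True, False], repeats=500))
```

A larger table (more rows, longer history) lets such a predictor track more branches and longer correlations, which is the kind of capacity increase that helps branch-heavy server code.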

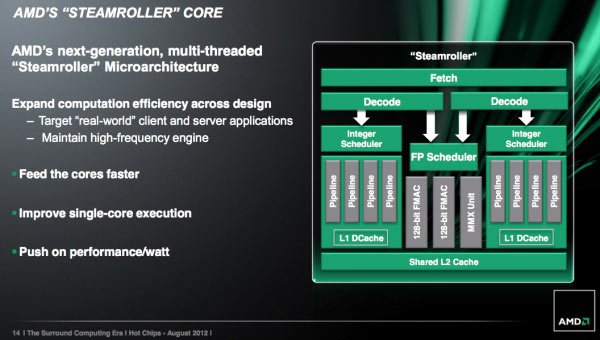

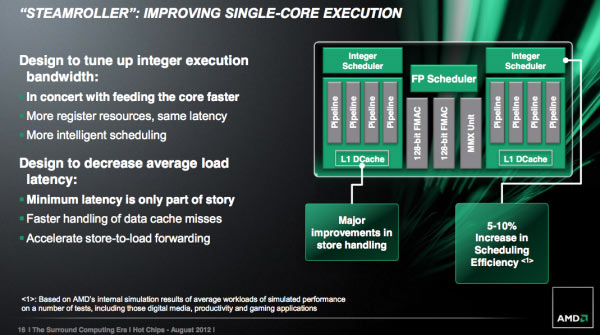

Compute and Execution Improvements

The FPU gets smaller: the MMX unit now shares some hardware with the 128-bit FMAC pipes. With less dedicated hardware in the FPU pipeline, power consumption drops.

The integer and floating-point register files are bigger in Steamroller. Load operations (two operands) are also compressed so that they take only a single entry in the physical register file, which increases the effective size of each register file (RF) and, in turn, performance.

The scheduling windows also increased in size, which should enable greater utilization of existing execution resources.

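Steamroller is also better at detecting interlocks, where a load depends on an older store to the same address that hasn’t completed yet. In that case it cancels the load and gets the data directly from the store.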
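
The interlock case is what textbooks call store-to-load forwarding. The sketch below assumes a simple in-order store queue; `StoreQueue`, `store`, `load` and `commit_oldest` are hypothetical names, and the model resolves the load immediately rather than modelling the cancel-and-replay that real hardware performs.

```python
"""Store-to-load forwarding with a simple store queue (textbook sketch).

Hypothetical model: stores wait in program order in a queue before they
commit to memory. A younger load that overlaps an older in-flight store is an
"interlock"; instead of reading stale memory, the load takes the data
straight from the youngest matching store. Partial overlaps, which real
cores often cannot forward, are reported as a stall instead.
"""

from dataclasses import dataclass


@dataclass
class Store:
    addr: int
    size: int
    data: bytes


class StoreQueue:
    def __init__(self, memory: bytearray) -> None:
        self.memory = memory
        self.pending: list[Store] = []  # in-flight stores, oldest first

    def store(self, addr: int, data: bytes) -> None:
        self.pending.append(Store(addr, len(data), data))

    def load(self, addr: int, size: int) -> tuple[bytes, str]:
        """Return (data, source) where source is 'forwarded', 'memory' or 'stall'."""
        # Search youngest-to-oldest so the most recent matching store wins.
        for st in reversed(self.pending):
            overlaps = addr < st.addr + st.size and st.addr < addr + size
            if not overlaps:
                continue
            contained = st.addr <= addr and addr + size <= st.addr + st.size
            if contained:
                offset = addr - st.addr
                return st.data[offset:offset + size], "forwarded"
            # Partial overlap: the load must wait for the store to commit.
            return b"", "stall"
        return bytes(self.memory[addr:addr + size]), "memory"

    def commit_oldest(self) -> None:
        """Write the oldest pending store to memory and drop it from the queue."""
        st = self.pending.pop(0)
        self.memory[st.addr:st.addr + st.size] = st.data
```

Without forwarding, the load in the interlock case would have to wait until the store reached the cache; forwarding hands over the data as soon as the overlap is detected.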

Cache Improvements

The shared L1 instruction cache has also grown, keeping more instructions readily available for execution. It goes from a 64KB 2-way set-associative design to a 96KB 3-way design, and the larger cache can reduce i-cache misses by up to 30%.
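
A small set-associative cache model shows how an extra way cuts conflict misses. This is a hypothetical sketch with assumed 64-byte lines and LRU replacement; `SetAssocCache` is an illustrative name. Under those assumptions both the 64KB 2-way and the 96KB 3-way configurations have 512 sets, so the difference is one extra way per set. The trace is a contrived worst case and is not the source of the 30% figure.

```python
"""Set-associative LRU instruction-cache model (hypothetical, simplified).

Compares a 64KB 2-way cache with a 96KB 3-way cache using 64-byte lines.
Both have 512 sets, so the extra capacity shows up as one more way per set.
"""

from collections import OrderedDict

LINE_SIZE = 64  # assumed cache line size in bytes


class SetAssocCache:
    def __init__(self, size_bytes: int, ways: int) -> None:
        self.ways = ways
        self.num_sets = size_bytes // (LINE_SIZE * ways)
        # Each set maps tag -> None, ordered from least to most recently used.
        self.sets = [OrderedDict() for _ in range(self.num_sets)]
        self.misses = 0

    def access(self, addr: int) -> bool:
        """Access one byte address; return True on a hit."""
        line = addr // LINE_SIZE
        index, tag = line % self.num_sets, line // self.num_sets
        s = self.sets[index]
        if tag in s:
            s.move_to_end(tag)  # mark as most recently used
            return True
        self.misses += 1
        if len(s) >= self.ways:
            s.popitem(last=False)  # evict the least recently used line
        s[tag] = None
        return False


if __name__ == "__main__":
    # Three hot code regions 32KB apart map to the same set in both caches.
    trace = [0, 32 * 1024, 64 * 1024] * 100
    for size_kb, ways in ((64, 2), (96, 3)):
        cache = SetAssocCache(size_kb * 1024, ways)
        for addr in trace:
            cache.access(addr)
        print(f"{size_kb}KB {ways}-way: {cache.misses} misses")
```

With LRU, three lines cycling through a 2-way set evict each other on every access (300 misses), while the 3-way set keeps all three after the first 3 compulsory misses.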

Steamroller now features a decoded micro-op queue, which is essentially a cache that stores both an instruction’s address and its decoded ops. When a requested address is already in the queue, the ops are supplied from there, which offloads the front end and even lets it be turned off to save power. This is quite similar to Intel’s Sandy Bridge design.
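
The idea can be sketched as a small address-keyed cache placed in front of the decoder. Everything here is a hypothetical model: `MicroOpCache`, `fake_decode` and the counters are illustrative names, LRU replacement is assumed, and "front end gated" simply counts lookups that were served without fetch and decode.

```python
"""Decoded micro-op cache in front of a decoder (hypothetical sketch).

On a hit the decoded ops come straight from the cache and the fetch/decode
front end can stay idle (counted as a gated lookup); on a miss the front end
decodes the instruction and the result is installed with LRU replacement.
"""

from collections import OrderedDict
from typing import Callable


class MicroOpCache:
    def __init__(self, capacity: int, decode: Callable[[int], list[str]]) -> None:
        self.capacity = capacity
        self.decode = decode  # stands in for the real fetch + decode front end
        self.entries = OrderedDict()  # address -> decoded ops, LRU first
        self.frontend_active = 0  # lookups that needed fetch + decode
        self.frontend_gated = 0   # lookups served with the front end idle

    def lookup(self, addr: int) -> list[str]:
        if addr in self.entries:
            self.entries.move_to_end(addr)  # mark as most recently used
            self.frontend_gated += 1
            return self.entries[addr]
        self.frontend_active += 1
        ops = self.decode(addr)
        self.entries[addr] = ops
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict the least recently used
        return ops


def fake_decode(addr: int) -> list[str]:
    """Hypothetical decoder: one placeholder micro-op per instruction."""
    return [f"uop@{addr:#x}"]


if __name__ == "__main__":
    cache = MicroOpCache(capacity=32, decode=fake_decode)
    loop = [0x1000, 0x1004, 0x1008, 0x100C]  # a 4-instruction loop body
    for _ in range(10):
        for pc in loop:
            cache.lookup(pc)
    print(cache.frontend_active, cache.frontend_gated)  # 4 36
```

Tight loops are the best case: after the first iteration every lookup hits, so the front end only has to wake up for the first pass.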

The L2 cache in Steamroller is dynamically resizable based on the workload and the cache hit rate, so portions of it can be powered off to save power when the workload doesn’t need them. This switching happens in 0.25x of the cache clock time, so powering portions off or on adds almost no delay during normal operation.
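
A resizing controller of this kind can be sketched as a hit-rate policy with hysteresis. This is purely illustrative: `L2ResizePolicy`, the 16-way default, and both thresholds are assumptions rather than AMD’s design. Each sampling interval it powers one way off when the hit rate is comfortably high, powers one back on when the hit rate drops, and shrinks to the minimum when the interval was idle.

```python
"""Hypothetical hit-rate-driven L2 resizing policy (illustrative only).

Every sampling interval the controller looks at the L2 hit rate and powers
ways on or off one at a time. The two thresholds form a hysteresis band so
the cache does not flip size on every interval. None of these numbers are
AMD's; they only show the shape of such a controller.
"""

from dataclasses import dataclass


@dataclass
class L2ResizePolicy:
    max_ways: int = 16
    min_ways: int = 2
    grow_below: float = 0.90    # hit rate under this: power a way back on
    shrink_above: float = 0.98  # hit rate over this: try powering a way off
    active_ways: int = 16

    def step(self, hits: int, accesses: int) -> int:
        """Update and return the number of powered ways after one interval."""
        if accesses == 0:
            # Idle interval (e.g. the core is heading to sleep): shrink fully.
            self.active_ways = self.min_ways
        else:
            hit_rate = hits / accesses
            if hit_rate < self.grow_below and self.active_ways < self.max_ways:
                self.active_ways += 1
            elif hit_rate > self.shrink_above and self.active_ways > self.min_ways:
                self.active_ways -= 1
        return self.active_ways
```

The hysteresis band is the key design choice: between the two thresholds the size stays put, so a workload hovering near one threshold doesn’t toggle ways on and off every interval.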

These L2 optimizations improve power efficiency. This matters most for mobile applications, where the CPU typically performs small tasks and goes back to sleep within a couple of milliseconds. L2 resizing provides no performance benefit, but the battery savings are significant for mobile devices.

Steamroller also reduces L2 / L3 read/write latencies, but the difference is small.

Verdict

Going by the standard tick-tock cadence, Piledriver improved power efficiency, so Steamroller should do better in the performance department. AMD’s technical details definitely hint in that direction, but we’ll have to see how it stacks up against Intel’s Haswell once it’s released in 2013.

Future: AMD Excavator in 2014

AMD Excavator, when released, should push power efficiency further. Its area savings come from computer-automated transistor placement/routing and higher-density gate/logic libraries, and the smaller core saves power because clocks and signals don’t have to be routed as far. The trade-off is that you can no longer reach the highest CPU frequencies, but the sacrifice is worth it: in power-constrained environments (e.g. a notebook) you won’t hit max frequency regardless, and you’ll instead see a 15–30% energy reduction per operation.

We write about the latest and greatest in Tech Guides, Apple, iPhone, Tablets, Android, Open Source and Latest in Tech. Subscribe to us @geeknizer on Twitter, on Google+ or on our Facebook fan page.
