Multi-core processors, initially targeted at servers, eventually became part of our desktops and portables. But what is still lacking is “efficient parallelization” of programs: modern multi-core applications still don’t get the most out of the hardware because of inherent dependencies.

Some programs are difficult to parallelize, including word processors and Web browsers. These programs operate much like a flow chart, with certain program elements dependent on the outcome of others. Such programs use only one core most of the time, minimizing the benefit of multi-core chips.

But NC State researchers have developed a technique that allows hard-to-parallelize applications to run in parallel by taking a non-traditional approach to breaking programs into threads. The technique is presented in a paper titled “MMT: Exploiting Fine-Grained Parallelism in Dynamic Memory Management”.

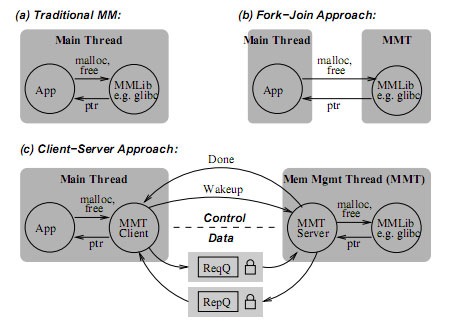

Normally, a program performs a computation, then a memory-management operation (such as allocating or freeing memory), and this cycle repeats over and over throughout execution. For hard-to-parallelize applications, both steps are performed on a single core, wasting the advantage of the extra hardware.

The new work from North Carolina State University overcomes this limitation by removing the memory-management step from the main flow of the program and running it as a totally separate thread. As a result, the computation thread and the memory-management thread execute in parallel, putting more cores to work.

How much is the performance gain? The researchers report a 20% performance advantage from this change alone. In practice, though, the gain could vary, perhaps between 18% and 30%, because the cost of memory operations depends on heap fragmentation and is never a simple constant-time (O(1)) or linear-time (O(n)) function.
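As a rough sanity check on such figures (a back-of-the-envelope model of our own, not taken from the paper), suppose a fraction $f$ of the original single-threaded run time $T$ is spent in memory management, and that MMT overlaps it perfectly with computation at zero communication cost. The run time can then shrink no further than the longer of the two threads:

$$
T_{\text{MMT}} \ge \max\big((1-f)\,T,\; f\,T\big),
\qquad
S = \frac{T}{T_{\text{MMT}}} \le \frac{1}{1-f} \quad \text{for } f \le \tfrac{1}{2}.
$$

Reading “20%” as a speedup of $S = 1.2$, this requires $f \ge 1 - 1/1.2 \approx 0.167$: memory management must account for at least about a sixth of the original run time, and any synchronization cost between the two threads pushes that requirement higher.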

Decoupled memory management offers more than just speed. It also makes it easier (and cheaper) to add security features, by offloading to another core management functions that identify anomalies in program behavior or perform additional security checks. When used with the memory-management library PHKmalloc, for example, MMT can limit the slowdown imposed by some of the library’s security features. These security checks are very expensive, slowing down benchmarks by 21% on average and by 44% in the worst case. With MMT, however, there is practically no loss.
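To make the idea of moving checks off the critical path concrete, here is a minimal sketch under stated assumptions. It is not PHKmalloc’s API or the paper’s implementation. The class `CheckedFreeThread` and its methods are hypothetical. Blocks are modeled as integer ids that are never reused. The “security check” is simple double-free and invalid-free detection. The computation thread only enqueues events, while a separate checker thread does the bookkeeping. CPython’s GIL means this shows the protocol rather than a real speedup.

```python
import queue
import threading


class CheckedFreeThread:
    """Hypothetical sketch: heap-safety checks on free() run on a separate thread.

    The computation thread only enqueues events; the checker thread owns the
    bookkeeping and records anomalies (double frees and invalid frees).
    """

    def __init__(self):
        self._next_id = 0             # owned by the computation thread
        self._events = queue.Queue()  # computation -> checker, FIFO
        self._live = set()            # owned by the checker thread
        self._freed = set()
        self.anomalies = []           # (kind, block_id) pairs found by the checker
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def _run(self):
        while True:
            op, block = self._events.get()
            if op == "stop":
                break
            if op == "alloc":
                self._live.add(block)
            elif block in self._live:      # a valid free
                self._live.remove(block)
                self._freed.add(block)
            elif block in self._freed:     # freed twice
                self.anomalies.append(("double free", block))
            else:                          # never allocated
                self.anomalies.append(("invalid free", block))

    def alloc(self):
        block = self._next_id
        self._next_id += 1
        self._events.put(("alloc", block))  # checker registers it asynchronously
        return block

    def free(self, block):
        self._events.put(("free", block))   # no check on the computation thread

    def shutdown(self):
        # FIFO order guarantees all earlier events are checked before "stop".
        self._events.put(("stop", None))
        self._thread.join()


if __name__ == "__main__":
    checker = CheckedFreeThread()
    a = checker.alloc()
    checker.free(a)
    checker.free(a)      # bug: double free
    checker.free(999)    # bug: never allocated
    checker.shutdown()
    print(checker.anomalies)
```

The trade-off of this design is that an anomaly is detected slightly after `free()` has already returned. A real system would still need a policy for what to do once the checker thread finds one, such as aborting the program.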

In this approach, the computation thread notifies the memory-management thread whenever a memory-management function needs to be performed, so the allocation work is effectively carried out in parallel. Likewise, when the computation thread no longer needs certain data, it informs the memory-management thread, which frees the marked memory in parallel. Looked at closely, the latter resembles the garbage-collection thread in Java. The difference is its manual nature: memory is freed on explicit request rather than by automatic collection of unreferenced objects.
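The notification protocol described above can be sketched as follows. This is a toy model under stated assumptions, not the paper’s implementation. The class `MemoryManagerThread`, the `prefetch` parameter, and the “refill” message are all hypothetical. Memory is modeled as a fixed pool of block ids, and only the manager thread touches the free list. To let allocation proceed in parallel, the manager keeps a few blocks allocated ahead of time, so `alloc()` usually just picks one up. `free()` is fire-and-forget. Because of CPython’s GIL, this illustrates the message flow rather than a measurable speedup.

```python
import queue
import threading


class MemoryManagerThread:
    """Hypothetical toy allocator whose bookkeeping runs on one dedicated thread.

    Memory is modeled as a fixed pool of block ids; only the manager thread
    touches the free list.
    """

    def __init__(self, num_blocks, prefetch=4):
        self._free_list = list(range(num_blocks))    # owned by the manager thread
        self._requests = queue.Queue()               # computation -> manager, FIFO
        self._ready = queue.Queue(maxsize=prefetch)  # manager -> computation: pre-allocated ids
        self.freed_count = 0
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def _refill(self):
        # Keep blocks allocated ahead of time so alloc() rarely waits.
        # Only this thread puts into _ready, so a non-full queue cannot overflow.
        while self._free_list and not self._ready.full():
            self._ready.put_nowait(self._free_list.pop())

    def _run(self):
        self._refill()
        while True:
            op, block = self._requests.get()
            if op == "stop":
                break
            if op == "free":
                self._free_list.append(block)
                self.freed_count += 1
            self._refill()  # after either a "free" or a "refill" notification

    def alloc(self):
        """Computation thread: take a block the manager already prepared."""
        block = self._ready.get()             # waits only if the manager fell behind
        self._requests.put(("refill", None))  # manager tops up in the background
        return block

    def free(self, block):
        """Computation thread: notify the manager and keep computing."""
        self._requests.put(("free", block))

    def shutdown(self):
        # FIFO order guarantees every earlier free is processed before "stop".
        self._requests.put(("stop", None))
        self._thread.join()

    def available(self):
        # Meaningful only after shutdown(), when the manager is idle.
        return len(self._free_list) + self._ready.qsize()


if __name__ == "__main__":
    mm = MemoryManagerThread(num_blocks=8)
    for step in range(100):
        block = mm.alloc()  # the computation would use `block` here
        mm.free(block)      # hand the free to the manager without waiting
    mm.shutdown()
    print(mm.freed_count, mm.available())
```

The bounded `_ready` queue is the design choice doing the work here. It lets the manager run ahead of the computation by up to `prefetch` blocks without hoarding the whole pool.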

The paper, “MMT: Exploiting Fine-Grained Parallelism in Dynamic Memory Management,” will be presented on April 21st at the IEEE International Parallel and Distributed Processing Symposium in Atlanta.

via Infow
